New on Prime Video: May 2024

If you have an Amazon Prime subscription, you also have access to the Prime Video streaming service. If you have Prime Video, there are a …

The three-week run that Netflix’s alien invasion series 3 Body Problem had enjoyed as the streamer’s biggest TV show in the world has now come …

There’s always one person you know who opens Messages to send a text, then swipes to close the app right after. They say they’re saving …

Apple’s new AI features for iOS 18, iPadOS 18, and macOS 15 are set to be announced during the WWDC 2024 keynote. While we’ve learned …

A new month is around the corner, which means dozens of new movies and shows are coming to Netflix. If you’re looking forward to watching …

The Roomba Combo j7+ is easily one of the smartest robot vacuums that iRobot has ever made. It’s a powerful autonomous vacuum and mop that …

Well, it’s official! After rumors it was happening in March or April and then thinking it might not happen at all, Apple has officially announced …

Wednesday’s top daily deals include a rare discount on the Nintendo Switch OLED console. I personally took advantage of that sale because I recently broke …

Apple wants to convince small businesses and entrepreneurs that the best way to grow their ideas is by using its products. This is why the …

The US Senate voted in favor of legislation that could ban TikTok in the country if ByteDance doesn’t divest its popular app over the next …

Apple’s AirPods Pro are among the best-selling premium earbuds of all time. While it’s true that Apple doesn’t share sales data, we have plenty of …

The iOS 17.5 beta cycle just started, and this might be Apple’s last iOS 17 update for iPhone users before it unveils iOS 18 during …

From TV gems like Acapulco and Ted Lasso to less obvious examples like Eugene Levy’s travel show and even a drama like Drops of God, …

Over the past few years, I’ve been following a project that should pique the interest of any Elder Scrolls fan. Independent studio OnceLost Games was …

On Monday, Marvel Studios debuted the first full trailer for its one and only theatrical release of 2024: Deadpool & Wolverine. After teasing Wolverine back …

NASA’s administrator, Bill Nelson, is once again making some pretty big claims about China’s ongoing space missions. While it is no secret that China’s secret …

Meta’s Ray-Ban glasses aren’t as popular as our phones yet, and they probably never will be. Still, as the company continues to invest in them …

At least once a week, generative AI finds a new way to terrify us. We are still anxiously awaiting news about the next large language …

2024 is proving to be the year that Sydney Sweeney’s star goes stratospheric. Her newest feature film Immaculate, in which she plays an American nun …

One of the most expensive parts of any rocket launch is getting the rocket off the ground and into space. This part of the mission …

Those of us who can’t wait for Steven Knight’s upcoming Peaky Blinders movie can content ourselves with something of a consolation prize in the meantime. …

Apple Vision Pro launched in early February after years of rumors about the company’s plans for mixed reality. Ahead of an international expansion, reliable analyst …

The big news is that there are some Amazon gift card deals that anyone and everyone should take advantage of immediately. Altogether, there’s more than …

watchOS 10.5 beta 3 is now available to Apple Watch users. After a mild update with watchOS 10.4, it seems this next version won’t bring many …

A week after seeding the second beta build of visionOS 1.2, Apple is now seeding its third testing version. Although it’s unclear what’s …

tvOS 17.5 beta 3 is now available to everyone with an Apple TV that can run tvOS. At this moment, it’s unclear what this operating …

Apple has just released macOS 14.5 beta 3 to developers. At this moment, it’s unclear what features this software update might bring, as we haven’t …

Apple just released iOS 17.5 beta 3 to developers. This might be Apple’s last iOS 17 update for iPhone users before it unveils iOS 18 during the WWDC 2024 keynote …

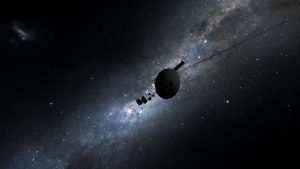

After five months of gibberish, Voyager 1 is finally talking to NASA again. The 46-year-old probe randomly started submitting funky data to NASA back in …

iPad users rejoiced when Apple introduced the Weather app in 2022 with iPadOS 16. However, another iPhone app was still missing from this release, which …

The Samsung Galaxy Tab A8 tablet is one of the best all-around tablets that Samsung makes. It’s the ideal balance between price and performance, giving …